
YJWANG


kubeflow installation (On-premise / kfctl_k8s_istio.yaml)

왕영주 2021. 1. 18. 14:15

Refer to

- https://www.kubeflow.org/docs/started/k8s/kfctl-k8s-istio/

- https://kubernetes.io/ko/docs/tasks/administer-cluster/change-default-storage-class/

- https://cloudarchitecture.tistory.com/43

- https://github.com/kubeflow/manifests/issues/959

Provisioner setup

I used an NFS provisioner. For the setup, see the first part of the post below.

(The NFS export requires the no_root_squash option.)

https://yjwang.tistory.com/entry/Jenkins-on-Kubernetes-NFS-Dynamic-PV
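Because the provisioner fails in confusing ways when no_root_squash is missing, it can help to check the export line on the NFS server first. This is a minimal sketch, assuming standard /etc/exports syntax (`PATH CLIENT(opts) ...`). The helper name `export_has_no_root_squash` is hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: does the /etc/exports entry for a path include no_root_squash?
# Assumes standard exports(5) syntax: "PATH CLIENT(opts) [CLIENT(opts) ...]".
export_has_no_root_squash() {
  local exports_file="$1" export_path="$2"
  # Match lines whose first field is the export path, then look for the option.
  awk -v p="$export_path" '
    $1 == p { found = 1; if ($0 ~ /no_root_squash/) ok = 1 }
    END { if (found && ok) print "yes"; else print "no" }
  ' "$exports_file"
}
```

For example, run `export_has_no_root_squash /etc/exports /srv/nfs` on the NFS server. Here `/srv/nfs` is a placeholder for your actual export path. A result of `no` means the option still has to be added and the export reloaded.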

[root@master01 ~]# kubectl get all -l app=nfs-pod-provisioner

NAME READY STATUS RESTARTS AGE

pod/nfs-pod-provisioner-5cf8f66656-rgsq7 1/1 Running 0 116s

NAME DESIRED CURRENT READY AGE

replicaset.apps/nfs-pod-provisioner-5cf8f66656 1 1 1 116s

Set the StorageClass as the default

If the same annotation is also set in the StorageClass YAML file, it takes effect on kubectl apply with no extra step.
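The snippet below shows what setting the annotation in the manifest looks like. This is a sketch only. It assumes the name `nfsprov`, the provisioner name `nfs-prov`, and the `Retain`/`Immediate` settings that appear in the `kubectl get storageclass` output in this post. The `provisioner` value must match whatever your NFS provisioner Deployment registers. The function name `default_storageclass_manifest` is hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a StorageClass manifest that is marked as the
# cluster default at creation time, so no separate `kubectl patch` is needed.
# Values (nfsprov, nfs-prov, Retain, Immediate) are copied from this post's output.
default_storageclass_manifest() {
  cat <<'EOF'
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfsprov
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: nfs-prov
reclaimPolicy: Retain
volumeBindingMode: Immediate
EOF
}
```

Usage: `default_storageclass_manifest | kubectl apply -f -`.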

[root@master01 nfs_provisioner]# kubectl patch storageclass nfsprov -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'

[root@master01 nfs_provisioner]# kubectl get storageclass

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

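nfsprov (default) nfs-prov Retain Immediate false 2m13s

For the default StorageClass to be assigned to PVCs automatically, the DefaultStorageClass admission plugin must be enabled.

Once the manifest is changed as shown below, the kube-apiserver pod restarts automatically. Apply the change on every master.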

[root@master01 nfs_provisioner]# cat /etc/kubernetes/manifests/kube-apiserver.yaml |grep Default

- --enable-admission-plugins=NodeRestriction,DefaultStorageClass
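The edit has to be repeated on every master, so a small check can confirm that the flag landed in each manifest. This is a minimal sketch, assuming the `--enable-admission-plugins=A,B,C` form shown above. The helper name `has_admission_plugin` is hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: report whether a kube-apiserver static-pod manifest
# enables a given admission plugin ("yes"/"no").
has_admission_plugin() {
  local manifest="$1" plugin="$2" plugins
  # Keep only the comma-separated value after '=' on the first matching line.
  plugins=$(grep -m1 -- '--enable-admission-plugins=' "$manifest" \
    | sed 's/.*--enable-admission-plugins=//' | tr -d '[:space:]')
  # Surround with commas so "Default" does not match "DefaultStorageClass".
  case ",${plugins}," in
    *",${plugin},"*) echo yes ;;
    *) echo no ;;
  esac
}
```

Example: `has_admission_plugin /etc/kubernetes/manifests/kube-apiserver.yaml DefaultStorageClass` on each master.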

[root@master01 ~]# kubectl get pod -n kube-system -l component=kube-apiserver

NAME READY STATUS RESTARTS AGE

kube-apiserver-master01 1/1 Running 0 4m13s

kube-apiserver-master02 1/1 Running 0 83s

Next, create a test PVC.

Even though storageClassName is not specified, the PV should be bound with StorageClass = nfsprov (default).
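To confirm the binding without scanning the table by eye, you can filter the PVC list for anything whose STATUS is not `Bound`. This is a sketch that reads `kubectl get pvc -A --no-headers` output on stdin. It assumes the default column order seen in this post (NAMESPACE NAME STATUS ...). The helper name `unbound_pvcs` is hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print "namespace/name STATUS" for every PVC that is not Bound.
# Expects `kubectl get pvc -A --no-headers` output (no header row) on stdin.
unbound_pvcs() {
  awk '$3 != "Bound" { print $1 "/" $2 " " $3 }'
}
```

Usage: `kubectl get pvc -A --no-headers | unbound_pvcs`. Empty output means every claim is bound. The same check is useful later, once Kubeflow creates its own PVCs.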

[root@master01 nfs_provisioner]# cat 03-pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: sample
  namespace: default
spec:
  # storageClassName: nfsprov # SAME NAME AS THE STORAGECLASS
  accessModes:
    - ReadWriteMany # must be the same as PersistentVolume
  resources:
    requests:
      storage: 10Gi

[root@master01 nfs_provisioner]# kubectl apply -f 03-pvc.yaml

persistentvolumeclaim/sample created

[root@master01 nfs_provisioner]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

sample Bound pvc-3ec1d444-2bc7-4d73-8728-a485af0f4267 10Gi RWX nfsprov 3s

Installing Kubeflow

There are two ways to install Kubeflow:

- kfctl_k8s_istio.yaml: installs Istio + Kubeflow. No authentication is provided, so Kubeflow can be used as soon as you connect.

- kfctl_istio_dex.yaml: installs Dex + Istio + Kubeflow. User authentication makes a multi-user environment possible.

This post uses kfctl_k8s_istio.yaml. The Dex-based method will be covered in a separate post.

Installing the client and setting up the environment

[root@master01 kubeflow]# wget https://github.com/kubeflow/kfctl/releases/download/v1.2.0/kfctl_v1.2.0-0-gbc038f9_linux.tar.gz

--2021-01-18 02:43:23-- https://github.com/kubeflow/kfctl/releases/download/v1.2.0/kfctl_v1.2.0-0-gbc038f9_linux.tar.gz

Resolving github.com (github.com)... 52.78.231.108

...

[root@master01 kubeflow]# tar -xvf kfctl_v1.2.0-0-gbc038f9_linux.tar.gz

./kfctl

[root@master01 kubeflow]# cat kubeflow.env

# The following command is optional. It adds the kfctl binary to your path.

# If you don't add kfctl to your path, you must use the full path

# each time you run kfctl.

# Use only alphanumeric characters or - in the directory name.

export PATH=$PATH:"/root/kubeflow"

# Set KF_NAME to the name of your Kubeflow deployment. You also use this

# value as directory name when creating your configuration directory.

# For example, your deployment name can be 'my-kubeflow' or 'kf-test'.

export KF_NAME=kf-test

# Set the path to the base directory where you want to store one or more

# Kubeflow deployments. For example, /opt/.

# Then set the Kubeflow application directory for this deployment.

export BASE_DIR=/root/kubeflow

export KF_DIR=${BASE_DIR}/${KF_NAME}

# Set the configuration file to use when deploying Kubeflow.

# The following configuration installs Istio by default. Comment out

# the Istio components in the config file to skip Istio installation.

# See https://github.com/kubeflow/kubeflow/pull/3663

export CONFIG_URI="https://raw.githubusercontent.com/kubeflow/manifests/v1.2-branch/kfdef/kfctl_k8s_istio.v1.2.0.yaml"
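The comment in kubeflow.env asks for only alphanumeric characters or `-` in the directory name. The hypothetical helper `valid_kf_name` below checks a candidate name against that rule before it is exported. It is a sketch of the comment's rule, not a check taken from kfctl:

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed only if the name is non-empty and contains
# nothing but letters, digits and '-', per the comment in kubeflow.env.
valid_kf_name() {
  [[ -n "$1" && "$1" =~ ^[[:alnum:]-]+$ ]]
}
```

Example: `valid_kf_name "$KF_NAME" || echo "invalid KF_NAME: $KF_NAME"`.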

[root@master01 kubeflow]# source kubeflow.env

[root@master01 kubeflow]# env |grep KF

KF_DIR=/root/kubeflow/kf-test

KF_NAME=kf-test

Set up and deploy Kubeflow

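Apply the following on every control-plane node. It is required for Istio to obtain an API token and authenticate before it can work.

There are two master servers here, so I applied it to both. The apiserver pod restarts automatically after the change, so no manual restart is needed.

In a production environment, apply the change one node at a time.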
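The two flags must be present on each control-plane node. The sketch below checks a manifest for both flags used in this post and prints any that are missing. It only checks that each flag is present, not whether the key path or issuer value is correct. The helper name `check_sa_flags` is hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: verify a kube-apiserver manifest contains both
# service-account flags; prints each missing flag and returns non-zero if any is absent.
check_sa_flags() {
  local manifest="$1" missing=0 flag
  for flag in --service-account-signing-key-file= --service-account-issuer=; do
    if ! grep -q -- "$flag" "$manifest"; then
      echo "missing: ${flag%=}"
      missing=1
    fi
  done
  return "$missing"
}
```

Example: `check_sa_flags /etc/kubernetes/manifests/kube-apiserver.yaml && echo ok` on each master.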

[root@master01 ~]# cat /etc/kubernetes/manifests/kube-apiserver.yaml

spec:
  containers:
  - command:
    ...
    - --service-account-signing-key-file=/etc/kubernetes/ssl/sa.key
    - --service-account-issuer=kubernetes.default.svc

Running the deployment

[root@master01 kubeflow]# mkdir -p ${KF_DIR}

[root@master01 kubeflow]# cd ${KF_DIR}

[root@master01 kf-test]# kfctl apply -V -f ${CONFIG_URI}

...

deployment.apps/spartakus-volunteer created

application.app.k8s.io/spartakus created

INFO[0149] Successfully applied application spartakus filename="kustomize/kustomize.go:291"

INFO[0149] Applied the configuration Successfully! filename="cmd/apply.go:75"

Verifying the deployment

[root@master01 kf-test]# kubectl get svc -A

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

cert-manager cert-manager ClusterIP 10.233.49.28 <none> 9402/TCP 3m13s

cert-manager cert-manager-webhook ClusterIP 10.233.9.214 <none> 443/TCP 3m13s

default kubernetes ClusterIP 10.233.0.1 <none> 443/TCP 2d18h

istio-system cluster-local-gateway ClusterIP 10.233.8.203 <none> 80/TCP,443/TCP,31400/TCP,15011/TCP,8060/TCP,15029/TCP,15030/TCP,15031/TCP,15032/TCP 3m17s

istio-system istio-citadel ClusterIP 10.233.4.248 <none> 8060/TCP,15014/TCP 3m29s

istio-system istio-galley ClusterIP 10.233.11.204 <none> 443/TCP,15014/TCP,9901/TCP 3m29s

istio-system istio-ingressgateway NodePort 10.233.17.64 <none> 15020:31555/TCP,80:31380/TCP,443:31390/TCP,31400:31400/TCP,15029:31219/TCP,15030:30783/TCP,15031:32035/TCP,15032:32060/TCP,15443:30908/TCP 3m29s

istio-system istio-pilot ClusterIP 10.233.52.249 <none> 15010/TCP,15011/TCP,8080/TCP,15014/TCP 3m29s

istio-system istio-policy ClusterIP 10.233.36.122 <none> 9091/TCP,15004/TCP,15014/TCP 3m29s

istio-system istio-sidecar-injector ClusterIP 10.233.19.24 <none> 443/TCP,15014/TCP 3m29s

istio-system istio-telemetry ClusterIP 10.233.7.185 <none> 9091/TCP,15004/TCP,15014/TCP,42422/TCP 3m29s

istio-system prometheus ClusterIP 10.233.4.35 <none> 9090/TCP 3m29s

knative-serving activator-service ClusterIP 10.233.61.122 <none> 80/TCP,81/TCP,8008/TCP,9090/TCP 88s

knative-serving autoscaler ClusterIP 10.233.53.213 <none> 8080/TCP,8008/TCP,9090/TCP,443/TCP 87s

knative-serving controller ClusterIP 10.233.18.39 <none> 8008/TCP,9090/TCP 87s

knative-serving istio-webhook ClusterIP 10.233.23.233 <none> 9090/TCP,8008/TCP,443/TCP 88s

knative-serving webhook ClusterIP 10.233.16.156 <none> 9090/TCP,8008/TCP,443/TCP 88s

kube-system coredns ClusterIP 10.233.0.3 <none> 53/UDP,53/TCP,9153/TCP 2d18h

kubeflow admission-webhook-service ClusterIP 10.233.3.85 <none> 443/TCP 97s

kubeflow application-controller-service ClusterIP 10.233.16.61 <none> 443/TCP 3m40s

kubeflow argo-ui NodePort 10.233.29.243 <none> 80:31036/TCP 98s

kubeflow cache-server ClusterIP 10.233.29.218 <none> 443/TCP 98s

kubeflow centraldashboard ClusterIP 10.233.3.14 <none> 80/TCP 98s

kubeflow jupyter-web-app-service ClusterIP 10.233.2.167 <none> 80/TCP 98s

kubeflow katib-controller ClusterIP 10.233.33.18 <none> 443/TCP,8080/TCP 97s

kubeflow katib-db-manager ClusterIP 10.233.28.233 <none> 6789/TCP 98s

kubeflow katib-mysql ClusterIP 10.233.41.246 <none> 3306/TCP 98s

kubeflow katib-ui ClusterIP 10.233.7.252 <none> 80/TCP 97s

kubeflow kfserving-controller-manager-metrics-service ClusterIP 10.233.34.244 <none> 8443/TCP 78s

kubeflow kfserving-controller-manager-service ClusterIP 10.233.16.77 <none> 443/TCP 78s

kubeflow kfserving-webhook-server-service ClusterIP 10.233.21.230 <none> 443/TCP 78s

kubeflow kubeflow-pipelines-profile-controller ClusterIP 10.233.57.249 <none> 80/TCP 97s

kubeflow metadata-db ClusterIP 10.233.7.129 <none> 3306/TCP 97s

kubeflow metadata-envoy-service ClusterIP 10.233.4.0 <none> 9090/TCP 97s

kubeflow metadata-grpc-service ClusterIP 10.233.25.123 <none> 8080/TCP 97s

kubeflow minio-service ClusterIP 10.233.46.67 <none> 9000/TCP 97s

kubeflow ml-pipeline ClusterIP 10.233.60.178 <none> 8888/TCP,8887/TCP 97s

kubeflow ml-pipeline-ui ClusterIP 10.233.47.28 <none> 80/TCP 97s

kubeflow ml-pipeline-visualizationserver ClusterIP 10.233.24.196 <none> 8888/TCP 97s

kubeflow mysql ClusterIP 10.233.49.3 <none> 3306/TCP 97s

kubeflow notebook-controller-service ClusterIP 10.233.20.8 <none> 443/TCP 97s

kubeflow profiles-kfam ClusterIP 10.233.45.15 <none> 8081/TCP 97s

kubeflow pytorch-operator ClusterIP 10.233.41.79 <none> 8443/TCP 97s

kubeflow seldon-webhook-service ClusterIP 10.233.55.52 <none> 443/TCP 97s

kubeflow tf-job-operator ClusterIP 10.233.62.39 <none> 8443/TCP

Checking that the PVCs are bound correctly

[root@master01 kf-test]# kubectl get pvc -A

NAMESPACE NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

default sample Bound pvc-3ec1d444-2bc7-4d73-8728-a485af0f4267 10Gi RWX nfsprov 7m34s

kubeflow katib-mysql Bound pvc-a9827321-3ae8-42be-9b84-ff2a3d5b7469 10Gi RWO nfsprov 2m5s

kubeflow metadata-mysql Bound pvc-e0ce8682-c69e-4e76-89dd-ffd374728181 10Gi RWO nfsprov 2m5s

kubeflow minio-pvc Bound pvc-a9d18a55-e7ba-429d-90a3-92cc50b88c0a 20Gi RWO nfsprov 2m5s

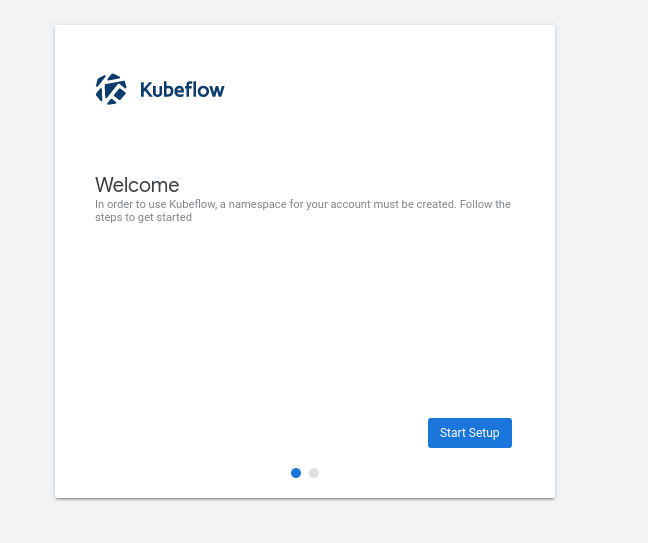

kubeflow mysql-pv-claim Bound pvc-ad288d69-bda3-4836-ab79-d599fa9d2db3 20Gi RWO nfsprov 2m5sWeb UI 접속

istio-ingressgateway를 통해 접근하며 80에 매핑된 NodePort (31380) 로 접근
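The NodePort can also be extracted from the PORT(S) column instead of read by eye. This is a sketch that parses the string `kubectl get svc` prints, where entries look like `80:31380/TCP`. The helper name `nodeport_for` is hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: given a PORT(S) string such as
# "15020:32072/TCP,80:31380/TCP,..." and a service port, print its NodePort.
nodeport_for() {
  local ports="$1" want="$2" entry
  local IFS=','              # split the column on commas only
  for entry in $ports; do
    # Only NodePort-style entries ("PORT:NODEPORT/PROTO") contain a colon.
    if [[ "$entry" == *:* && "${entry%%:*}" == "$want" ]]; then
      entry="${entry#*:}"    # -> "31380/TCP"
      echo "${entry%%/*}"    # -> "31380"
      return 0
    fi
  done
  return 1                   # no NodePort mapped to that port
}
```

On a live cluster, kubectl's JSONPath output gives the same value directly: `kubectl get svc -n istio-system istio-ingressgateway -o jsonpath='{.spec.ports[?(@.port==80)].nodePort}'`.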

[root@master01 ~]# kubectl get svc -n istio-system istio-ingressgateway

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

istio-ingressgateway NodePort 10.233.56.146 <none> 15020:32072/TCP,80:31380/TCP,443:31390/TCP,31400:31400/TCP,15029:30877/TCP,15030:31238/TCP,15031:30581/TCP,15032:31657/TCP,15443:30617/TCP 32m

Troubleshooting

- PVC stuck in Pending: first check that the provisioner is configured correctly.

- istio-token cannot be found, and mysql and many other pods do not start: check that /etc/kubernetes/manifests/kube-apiserver.yaml was modified as described above.

- The metadata-writer pod does not run properly: if the CPU does not support AVX, TensorFlow-related pods fail.

https://www.kubeflow.org/docs/other-guides/troubleshooting/

If you are testing on VMs, configure the vCPUs to expose AVX.
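The `grep -ci avx` check counts every line that mentions avx in any form. A slightly stricter variant matches `avx` as a whole word, so a flags line containing only `avx2` or `avx512f` is not counted as plain AVX. This is a sketch; `has_avx` is a hypothetical helper. It takes an optional path so it can also be run against a saved copy of cpuinfo from a VM:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print "yes" if the cpuinfo file lists the plain "avx"
# flag as a whole word, else "no". Defaults to /proc/cpuinfo.
has_avx() {
  local cpuinfo="${1:-/proc/cpuinfo}"
  if grep -qw 'avx' "$cpuinfo"; then echo yes; else echo no; fi
}
```

Run `has_avx` on each worker node. A `no` there matches the `0` result below and points to the vCPU configuration.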

[root@master01 kf-test]# grep -ci avx /proc/cpuinfo

0

...

kubeflow metadata-writer-756dbdd478-whk26 1/2 CrashLoopBackOff 7 12m

Installation complete